| Use Cases |

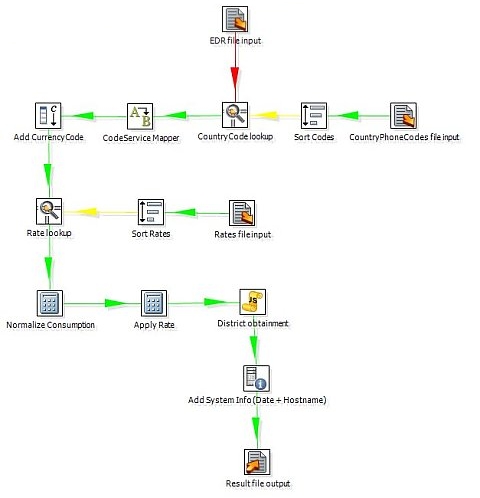

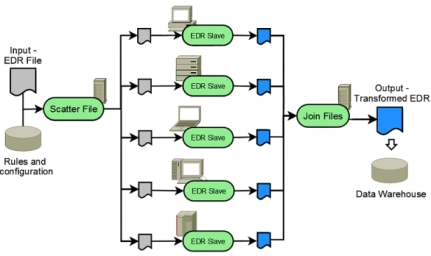

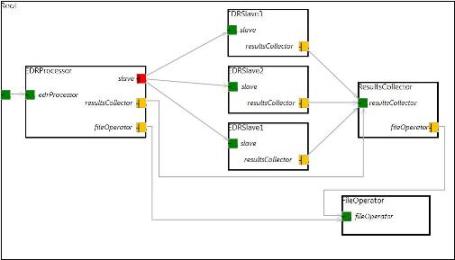

EDR Processing (Extended Data Record): see Plain Component Video and Autonomic Version Video


| Before | After |
| --- | --- |
| Single platform, expensive specific hardware | Multi platform, low-cost hardware |
| Changes in deployment need code changes | XML-based configurable deployment |
| Ad hoc design | Component-based top-down design, applying composition |
| High management costs | Automatic management, providing redundancy and fault tolerance |
| Difficult and expensive to scale | Cheap and easy to scale |
| Commercial, closed source | Based on a free, open source framework and libraries |

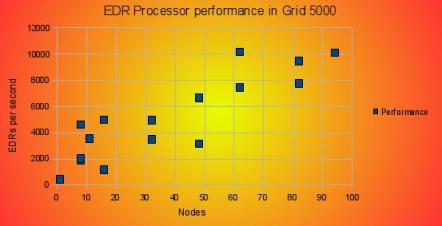

Benchmarks

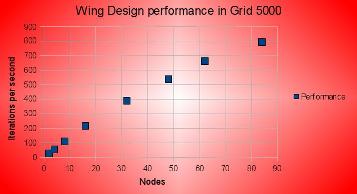

Tests on Grid5000 gave the following results:

- Performance scales close to linearly as the number of nodes increases.

- Given the data-intensive nature of the application, its performance depends on the network load and on the size of the file fragments distributed and processed. This explains the variability observed in some of the results.

- Real world use case: very common in Telecom companies

- EDR Processing is a data intensive application, involving large amounts of information being exchanged among nodes in a grid infrastructure.

- Redundancy and fault tolerance are a requirement of this use case.

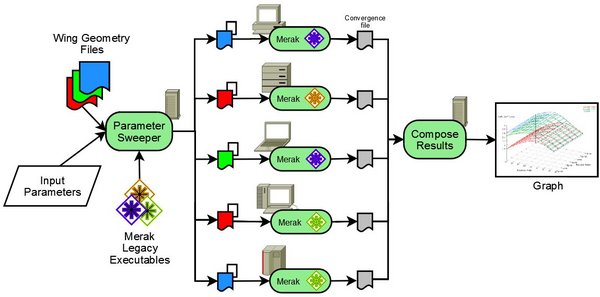

Objective: Merak manages small amounts of information but needs a lot of computing power. Our objective is to use the GridCOMP solution to wrap and grid-enable this existing legacy code, and also to prove that data staging for the input and output files can be integrated into this sweeping / optimization process. Proving both of these points is crucial for the adoption of the GCM by industry.


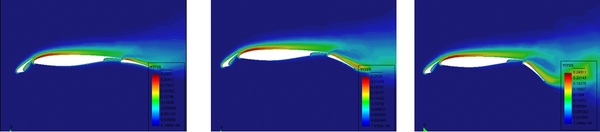

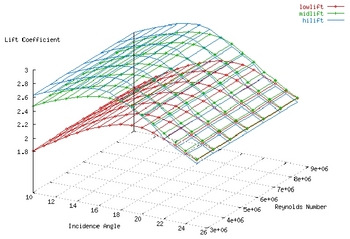

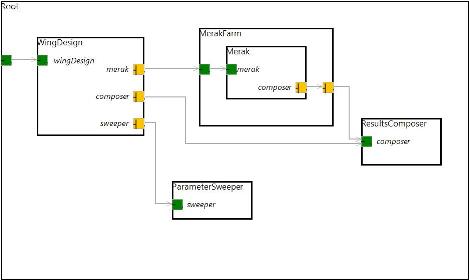

- Architectural design: Using GridCOMP, the Merak application receives several parameters (a range of incidence angles and Reynolds numbers, and a set of .geo files containing the geometry of a three-element airfoil). The Merak legacy code executable files are provisioned among the nodes; then the application generates and distributes the combinations of incidence angles, Reynolds numbers and geometries to process. Each node runs merak using the given parameters and transfers the results back. The application extracts the desired information from the result files and creates a graph comparing the lift coefficient of the different geometries.

- The GridCOMP Solution

- Legacy code wrapping support

- Composition of components to combine new and pre-existing components

- Collective interfaces that abstract and hide the complexity of distributed computing

- Autonomic component management that provides fault tolerance and load balancing

- Tools to design the architecture of the grid application (GIDE)

- The GridCOMP computation results in:

- Parallel computation to achieve useful response times (minutes)

- Easy deployment of legacy code.

- Enabling the re-usability of legacy code in modern, component-based, grid applications.

| Before | After |
| --- | --- |
| Legacy application, obsolete code, hardly reusable | Legacy code wrapped as a component, ready to be reused in new applications |
| Long response time, due to serial execution | Distributed execution reduces response time |
| Manual management of failed executions | Automatic management, providing redundancy and fault tolerance |
| Buying faster hardware as the only way to scale up (due to serial execution) | Scaling up means adding more low-cost hardware |

- Real, legacy code application from aerospace sector.

- Embarrassingly parallel application.

- CPU intensive processing
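The parameter sweep described in the architectural design above can be sketched as follows. This is a minimal illustration in Python, not GridCOMP or ProActive code: `run_merak` is a hypothetical stand-in for invoking the wrapped legacy executable on a node, the lift value it returns is a placeholder rather than a physical result, and a local thread pool stands in for the grid nodes.

```python
from concurrent.futures import ThreadPoolExecutor
from itertools import product


def build_sweep(angles, reynolds_numbers, geometries):
    """Generate every (angle, Reynolds number, geometry) combination to process."""
    return list(product(angles, reynolds_numbers, geometries))


def run_merak(task):
    """Hypothetical stand-in for running the wrapped legacy executable on one node.

    A real worker would launch the merak binary with these parameters and
    parse the lift coefficient from its result file; a placeholder value is
    returned here so that the sketch is runnable.
    """
    angle, reynolds, geometry = task
    placeholder_lift = 0.1 * angle  # NOT a physical model
    return {"angle": angle, "reynolds": reynolds,
            "geometry": geometry, "lift": placeholder_lift}


def sweep(angles, reynolds_numbers, geometries, workers=4):
    """Distribute the combinations to workers and group the results per geometry."""
    tasks = build_sweep(angles, reynolds_numbers, geometries)
    with ThreadPoolExecutor(max_workers=workers) as pool:
        results = list(pool.map(run_merak, tasks))
    by_geometry = {}
    for result in results:
        by_geometry.setdefault(result["geometry"], []).append(result)
    return by_geometry


if __name__ == "__main__":
    curves = sweep([0.0, 2.0, 4.0], [1.0e6, 2.0e6], ["a.geo", "b.geo"])
    for geometry, points in curves.items():
        print(geometry, len(points))
```

Because every combination is independent of the others, the tasks can be handed to any number of workers without coordination, which is what makes the application embarrassingly parallel.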

2- IBM Use Case

Biometric Identification: see the video

- Objective: The objective of the use case is to build a biometric identification system (BIS), based on fingerprint biometrics, which can work on a large user population of up to millions of individuals. To achieve real-time identification within a few seconds, the BIS application takes advantage of the Grid via GCM components. The goal is to simplify the programming of the distributed identification process through the use of the GCM framework, while being able to deploy the resulting application on arbitrary existing, potentially heterogeneous, hardware platforms.

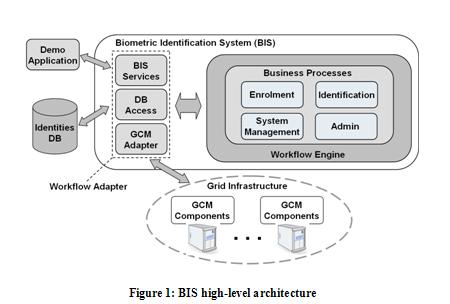

Architectural design: The BIS use case can be considered a business-process or workflow-driven application. Figure 1 outlines the high-level architectural design of the BIS. It is built around a workflow execution engine acting as the central control unit of the system. A number of business processes are implemented as workflow scripts running within the engine. The processes comprise functionality accessible from the demo application (e.g. identification) as well as internal system management logic required to control the distributed biometric matching. Furthermore, the BIS provides a number of adapters to the workflow engine such that the business processes can interact with external entities, namely, the database (DB) storing information about enrolled identities, and the interface to the Grid infrastructure.


The GCM adapter (cf. Figure 1) is triggered by the workflow scripts and provides distributed biometric matching functionality via GCM components. The basic approach is to have one component encapsulate the biometric matching functionality and to deploy it on all Grid nodes in an SPMD-style setting. The database of enrolled identities is then distributed across the nodes, so that the 1:N matching operation is executed in parallel.
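The SPMD-style 1:N matching described above can be sketched in a few lines of Python. This is an illustration under stated assumptions, not the BIS implementation: `similarity` is a hypothetical score (Jaccard overlap of feature sets) standing in for a real fingerprint matcher, the round-robin `partition` is one possible way to distribute the enrolled identities, and a local thread pool stands in for the Grid nodes.

```python
from concurrent.futures import ThreadPoolExecutor


def similarity(probe, template):
    """Hypothetical score: Jaccard overlap of two feature sets (not a real matcher)."""
    union = probe | template
    return len(probe & template) / len(union) if union else 0.0


def partition(enrolled, node_count):
    """Split the enrolled identities into one shard per node (round-robin)."""
    items = sorted(enrolled.items())
    return [dict(items[i::node_count]) for i in range(node_count)]


def match_on_node(probe, shard):
    """The same matching code runs on every node, each over its own shard."""
    best_id, best_score = None, -1.0
    for identity, template in shard.items():
        score = similarity(probe, template)
        if score > best_score:
            best_id, best_score = identity, score
    return best_id, best_score


def identify(probe, enrolled, node_count=4):
    """1:N identification: match on all shards in parallel, keep the best hit."""
    shards = partition(enrolled, node_count)
    with ThreadPoolExecutor(max_workers=node_count) as pool:
        hits = list(pool.map(lambda shard: match_on_node(probe, shard), shards))
    return max(hits, key=lambda hit: hit[1])


if __name__ == "__main__":
    enrolled = {"alice": {1, 2, 3, 4}, "bob": {5, 6, 7}, "carol": {1, 2, 9}}
    print(identify({1, 2, 3, 4}, enrolled, node_count=2))
```

Each node only ever touches its own shard, and the only data exchanged per request is the probe and one best hit per node, which is why the problem is data parallel.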

- The GridCOMP Solution offers to the BIS application:

- A platform independent high-level component framework for programming distributed applications.

- Advanced built-in features such as hierarchical composition, collective interfaces, virtual nodes, deployment descriptors, and support for autonomic management hiding the complexity of Grid programming.

- The GIDE, a comprehensive tool set supporting the complete software development cycle from graphical composition to component deployment and monitoring.

- The GridCOMP computation results in:

- An identification system that can work on a large user population in real-time.

- An identification system that can be easily deployed to arbitrary existing hardware and thus is cost-efficient.

- A system that is easily scalable without any software change.

- An efficient software architecture (e.g. hierarchical components, strict separation of concerns) leading to reduced development time and component reuse.

Before and After GridCOMP

With respect to other Grid middleware, the level of abstraction provided by the GridCOMP framework is unique. For the first time, it offers a hierarchical, Grid-specific component system in which components themselves can be distributed (e.g. can span multiple distributed computers). This, in combination with the multitude of additional advanced features such as collective interfaces or behavioural skeletons, eases the development of Grid applications to a level that has not been available before. For example, we had never envisioned developing the BIS application entirely without considering the target hardware infrastructure, and then deploying it later to a heterogeneous infrastructure without changing a single line of source code. The GridCOMP framework made this possible.

Performance

In terms of execution performance, the BIS application could not be compared with a version that does not use the GridCOMP framework, since no such version exists. Nevertheless, no negative impact on performance due to the GridCOMP framework was encountered. As expected, identification performance scales linearly with the number of nodes used, because the solution does not involve a significant amount of communication between remote resources. The communication time is therefore negligible compared with the actual computing time on the nodes.
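The scaling argument above can be made explicit with a simple cost model. The model and its symbols are assumptions introduced here for illustration, not measurements from the use case: $N$ is the number of enrolled identities, $n$ the number of nodes, $t_m$ the time for one match, and $t_c$ the communication time per identification request.

```latex
T(n) = \frac{N}{n}\, t_m + t_c,
\qquad
S(n) = \frac{T(1)}{T(n)} = \frac{N\, t_m + t_c}{\frac{N}{n}\, t_m + t_c}
\approx n \quad \text{when } t_c \ll \frac{N}{n}\, t_m .
```

Under this model the speedup $S(n)$ stays close to $n$ as long as the per-node matching time dominates the communication time, which is the condition the paragraph above describes.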

In terms of infrastructure independence and software development efficiency, the actual aims for which the framework was developed, the GridCOMP framework performed very well. It abstracts away the complexity of Grid programming and includes a lot of functionality that would otherwise need to be developed from scratch. This reduces both the skills required and the time to market for Grid application development. For instance, the initial version of the BIS application, including the basic distributed identification functionality, required only about 2000 lines of code. In the second version, autonomic management features were added that enable the BIS to adapt its execution performance to a dynamically configurable quality of service contract. Adding these features increased the code size of the BIS by only about 300 lines, thanks to the autonomic behavioural skeletons that are part of the GridCOMP framework.

Overall, the use case has shown that once developers are skilled in using the framework, they can develop distributed applications very efficiently. Furthermore, the strict separation of concerns, one of the core design aspects of the GridCOMP framework, proved very helpful when the use case application was deployed on Grid5000 without modifying the source code: only the deployment descriptors had to be adapted to run it on another infrastructure.

Why BIS as GridCOMP use case?

There are several reasons why the BIS was chosen as a use case for GridCOMP. Firstly, it is a business process application that is centrally driven by a workflow engine. This makes it somewhat different from traditional Grid applications, which often come from the scientific computing domain. The idea was to attract people from other application domains (e.g. BPM/workflow, security etc.) to look into the demo and find out what advanced Grid middleware could do for them. Secondly, it is a good test case for evaluating how the framework integrates with workflow systems. Thirdly, the BIS is somewhat different from the other use cases, not only because it is workflow-driven but also because it represents a data-parallel problem rather than a task-parallel one. Finally, the idea goes back to a previous customer request asking whether such a system, able to work on arbitrary hardware infrastructures, could be built. This makes it a real-world problem.

3- Atos Origin Use Case

Computing of DSO value: see Video 1 and Video 2

The Use Case selected by Atos Origin uses PL/SQL-based source code, and the candidate application selected was the so-called “Computing of DSO value”. The DSO (Days Sales Outstanding) is the mean time that clients take to pay an invoice to Atos. Several internal departments need this information to be as up to date as possible, and the process takes about 4 hours to compute the value for around 6,600 clients.

- Objective: The objective of using GridCOMP is to reduce the execution time without upgrading the infrastructure. The benefits of using GridCOMP include the possibility of updating the information more frequently while maintaining or reducing infrastructure costs.

- Architectural design: The DSO application is a client/server application with three main elements:

- A Graphical User Interface (GUI) used to enter some input data or parameters needed for the computation. This GUI runs on the client side and connects to the Database;

- Some PL/SQL processes which are called normally by this user interface in order to access the data stored in the database and process them to compute the results. This part runs on the server side, and it is executed by the database engine;

- The Database which stores the data.

The architecture proposed for the Atos Origin use case places the main program (using ProActive) between the user interface and the database, as the orchestrator of the whole application. The user sends a request to the main program, asking for the whole workflow to be executed. The main program connects to the main database and reads some data (tasks) to send to the remote nodes for execution in parallel. The remote nodes process the information received and send back the results. The main program then writes the results to the main database, which completes the process. The master database stores all the data, and only part of the information is sent to each remote node. Each node contains a database engine to store the part of the data sent by the scheduler and to start the PL/SQL process.
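The orchestration flow described above (read tasks, send them to nodes, collect results, write them back) can be sketched as follows. This is a minimal Python illustration, not the ProActive or PL/SQL code of the use case: the in-memory `main_db` dictionary, the function names and the batch size are all hypothetical, a thread pool stands in for the remote nodes, and the DSO is computed as the mean number of days between invoice and payment, following the definition given earlier.

```python
from concurrent.futures import ThreadPoolExecutor
from datetime import date
from statistics import mean


def read_tasks(main_db, batch_size):
    """Main program: read the client ids from the main database and batch them."""
    clients = sorted(main_db["invoices"])
    return [clients[i:i + batch_size] for i in range(0, len(clients), batch_size)]


def compute_on_node(batch, invoices):
    """Remote node: compute the mean payment delay, in days, for each client.

    Stands in for the PL/SQL process run by the node's local database engine.
    """
    return {
        client: mean((paid - issued).days for issued, paid in invoices[client])
        for client in batch
    }


def run_dso(main_db, node_count=4, batch_size=2):
    """Orchestrate the workflow: read tasks, dispatch them, write results back."""
    tasks = read_tasks(main_db, batch_size)
    with ThreadPoolExecutor(max_workers=node_count) as pool:
        # Each node receives only the part of the data that its batch needs.
        futures = [
            pool.submit(compute_on_node, batch,
                        {client: main_db["invoices"][client] for client in batch})
            for batch in tasks
        ]
        for future in futures:
            main_db["dso"].update(future.result())
    return main_db["dso"]


if __name__ == "__main__":
    db = {
        "invoices": {
            "c1": [(date(2008, 1, 1), date(2008, 1, 31))],
            "c2": [(date(2008, 1, 1), date(2008, 1, 11))],
        },
        "dso": {},
    }
    print(run_dso(db, node_count=2, batch_size=1))
```

Only the main program writes to the main database; the workers see nothing but their own slice of the data, which mirrors the separation between the master database and the per-node database engines.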

The GridCOMP Solution

GridCOMP solution offers to the DSO application:

- A grid-computing component-based model that is fully portable because it is solely based on Java.

- The complexity of Grid programming hidden behind features such as composition of components, deployment descriptors, virtual nodes, collective interfaces and autonomic management.

- An easy way to develop new applications using Grid components.

The GridCOMP computation results in:

- Reduced execution time without upgrading the infrastructure.

- More frequent information updates while maintaining or reducing infrastructure costs.

- The ability to scale up easily by just adding more low-profile machines and without changing the application.

- A system that is easily scalable without any software change

| Before | After |
| --- | --- |
| Process takes about 4 hours to complete (over 6,000 clients) | Reduced execution time |
| Information not updated frequently | Information updated more frequently; infrastructure costs maintained or reduced |

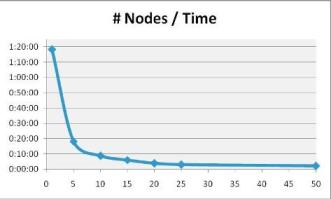

Benchmarks

Some tests were performed on the Grid5000 platform to measure the benefits of using a grid solution. As the chart shows, the calculation time using 25 nodes is almost 96% less than using only one node.
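The benchmark figure above can be turned into a speedup and a parallel efficiency with a short calculation. The helper names below are hypothetical, and the 96% value is used as a rounded input, so the results are approximate upper bounds rather than measured values.

```python
def speedup_from_reduction(reduction):
    """Speedup implied by a fractional reduction in run time (0 <= reduction < 1)."""
    if not 0.0 <= reduction < 1.0:
        raise ValueError("reduction must be in [0, 1)")
    return 1.0 / (1.0 - reduction)


def parallel_efficiency(speedup, nodes):
    """Fraction of the ideal linear speedup that is actually achieved."""
    return speedup / nodes


if __name__ == "__main__":
    s = speedup_from_reduction(0.96)
    print(f"speedup ~ {s:.1f}x, efficiency ~ {parallel_efficiency(s, 25):.2f}")
```

A reduction of 96% corresponds to a speedup of about 25 times; since the reported reduction is "almost" 96% on 25 nodes, the measured speedup is slightly below 25, that is, close to linear scaling.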

Selection strategy

Several criteria were defined to categorize the potential use cases and then select the most appropriate one as a GridCOMP Use Case. These criteria cover not only the technical feasibility of the Use Case but also some commercial aspects, such as:

- Atos Origin interest in the results

- Technical support availability

- Source/object code constraints

- Hardware constraints

- Ability of the project middleware to support Application code wrapping

- Feasibility in the timeframe

- Complementary to the other GridCOMP Use Cases
